I Hope Apple Improves Voice Recognition…

Here we are on the eve of another Apple press event. There are all kinds of rumors and speculation about what the famous electronics maker will unveil, but the one I am most looking forward to is the extensive voice control supposedly built into the core of iOS 5.

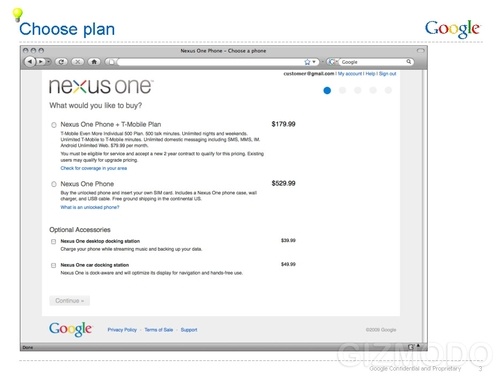

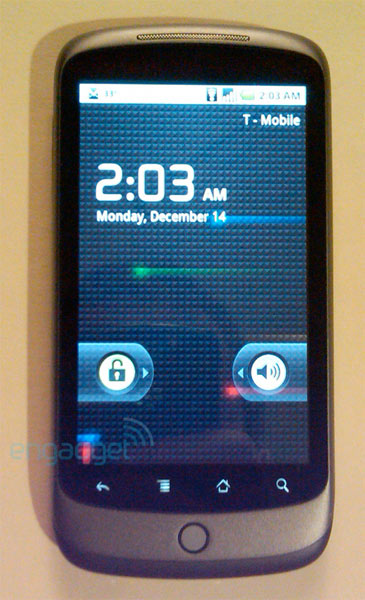

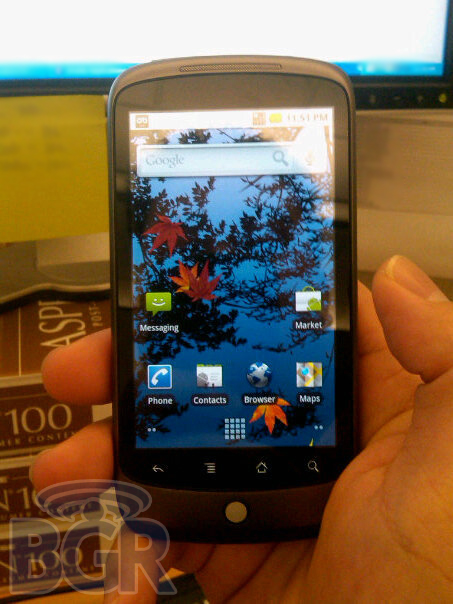

Voice recognition is hard. Google has had the capability built into its devices (and even its browser) for some time, but the quality of the interpretation is iffy at best. I have never felt that voice commands were worth the effort. When Google guesses wrong, you have to go back and correct its shoddy work: peck at the tiny screen to get the text cursor in the right place, delete all of the letters, and retype the word you originally meant to input. Like I said, voice recognition is tough.

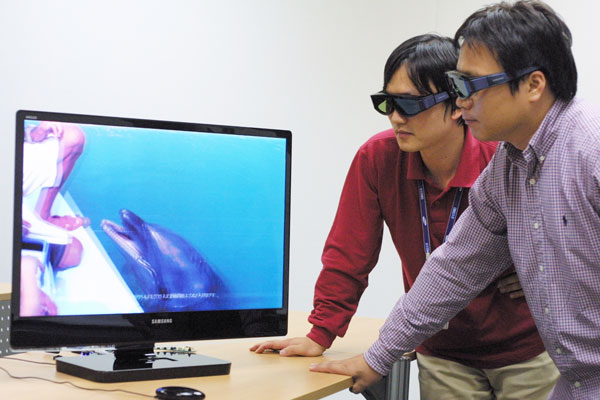

I believe voice is the next big computer interaction. Smartphones have taken off because they put a computer in our pocket. The ability to query the world's knowledge for answers to our mundane questions, once limited to our desks, is now available almost anywhere we are. But voice will certainly not become the primary form of computer interaction (just imagine a group of cubicle dwellers yelling over one another so their computers can hear them). Instead, voice will become prevalent in more intimate settings, such as your car (see Ford SYNC), where your hands aren't free, or your own home.

Imagine being able to ask your computer a question from your couch and having it speak the answer back to you. When you're getting ready in the morning, wouldn't it be great to simply ask aloud "What's the weather like today?" and hear the answer spoken back? This will lead to a more passive computing experience, where the computer is just there in the background. Smartphones are a stepping stone to this reality, but I predict it will be at least five years before a voice-aware home is even viable.

What’s Available Today?

There are a couple of interesting voice automation projects out there today.

CMU Sphinx is an open source toolkit for speech recognition. It's a bit too complicated for me to get up and running, even just to play around with it. The home automation project MisterHouse uses it for some basic voice commands (video demo).

Windows users can do similar things with Bill's Voice Commander, an add-on for the Active Home Pro software.

Samir Ahmed created the open source Iris project, a Java app that uses speech recognition and synthesis to make a desktop assistant. It looks like a really interesting proof of concept.

Google uses a web service to convert speech to text, which Mike Pultz reverse-engineered for his own purposes.